The Ultimate Guide to Caching in System Design

Learn about caching and how it improves system performance. Discover caching techniques, benefits, and how to implement caching effectively in your system design.

Introduction:

Ever wondered how websites and apps load so quickly? A lot of it comes down to caching. Caching is a technique that stores frequently accessed data in a temporary storage location so that it can be retrieved faster than if it had to be fetched from the original source each time. By reducing the time it takes to access data, caching not only speeds up performance but also helps in managing server loads more efficiently. Let’s explore how caching works and how you can use it to enhance your system’s performance.

What is Caching?

Caching speeds up retrieval by temporarily storing data that is requested often. Because that data stays close at hand, the system does not have to fetch it from the original source every time, which improves performance.

It's similar to keeping a cheat sheet of frequently used information on hand. Caching temporarily saves information so you can access it quickly rather than having to look it up every time you need it. Think of it as a small, very fast memory for your server or PC. For instance, when you visit a website, your browser might save images and other content so that the page loads considerably faster when you return. Keeping frequently used data handy is a basic idea that has a big impact on how quickly and efficiently systems operate.

Types of Caching

- In-Memory Caching:

- This type of caching stores data directly in RAM, making it incredibly fast to access. Tools like Redis and Memcached are popular choices for in-memory caching. Redis is a data structure store that supports types such as strings, hashes, lists, and sets, while Memcached is a simpler key-value store.

- Ideal for applications requiring quick data retrieval, such as session management and frequently accessed data in web apps.

- Disk Caching:

- Data is stored on a hard disk or SSD instead of RAM. While slower than in-memory caching, it’s more persistent and can handle larger volumes of data.

- Useful for caching files and data that don’t need to be accessed as quickly, like static website resources or large data files.

- Distributed Caching:

- This approach involves spreading cached data across multiple servers or nodes. It enhances scalability and reliability by distributing the load.

- Best for large-scale applications where a single cache might not be sufficient or reliable. Managed cloud services such as Amazon ElastiCache offer this kind of cache.
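As a rough illustration of in-memory caching inside a single process, the sketch below uses Python's standard `functools.lru_cache`. The `load_profile` function and the `source_calls` counter are hypothetical stand-ins for a slow database lookup; they are not part of any real system described here.

```python
from functools import lru_cache

# Counts how many times the slow source is actually consulted.
source_calls = 0


@lru_cache(maxsize=128)
def load_profile(user_id: str) -> tuple:
    """Hypothetical slow lookup; the body stands in for a database query."""
    global source_calls
    source_calls += 1
    return (user_id, f"user-{user_id}@example.com")


first = load_profile("42")   # miss: runs the function body
second = load_profile("42")  # hit: served from the in-process cache
stats = load_profile.cache_info()
print(source_calls, stats.hits, stats.misses)
```

The second call never reaches the function body, which is the same effect a Redis or Memcached lookup has on a database, just on a smaller scale.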

Benefits of Caching

- Improved Performance:

- Caching drastically speeds up data retrieval times. Imagine visiting your favorite website and instantly seeing the latest news without any loading delays. That’s the power of caching in action.

- Reduced Load:

- By storing frequently accessed data closer to the user or application, caching reduces the number of requests to your database or server. This helps in preventing overload and improves overall system efficiency.

- Cost Efficiency:

- Efficient caching reduces the need for expensive hardware upgrades and lowers operational costs. It makes the best use of existing resources, translating to savings in infrastructure costs.
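To put a number on these benefits, the average time per request can be estimated from the cache hit ratio. The sketch below is a back-of-the-envelope calculation only; the latency figures (1 ms for the cache, 50 ms for the database) and the 90% hit ratio are hypothetical values chosen for illustration.

```python
def average_latency_ms(hit_ratio: float, cache_ms: float, source_ms: float) -> float:
    """Expected latency per request for a given cache hit ratio."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    # Hits pay the cache latency, misses pay the source latency.
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * source_ms


# Hypothetical figures: 1 ms from the cache, 50 ms from the database.
without_cache = average_latency_ms(0.0, 1.0, 50.0)
with_cache = average_latency_ms(0.9, 1.0, 50.0)
print(without_cache, round(with_cache, 1))
```

Under these assumptions the average drops from 50 ms to roughly 5.9 ms, and only one request in ten still reaches the database.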

Understanding Caching

Caching is all about speeding up access to frequently used data. Imagine you have a favorite book you read often; instead of looking for it in a large library every time, you keep it on a shelf close to you. Similarly, caching stores frequently accessed data in a temporary location, so it’s quicker to reach. It improves overall performance by reducing the time it takes to fetch data from a main storage source like a database or an external API.

How to store frequently used data?

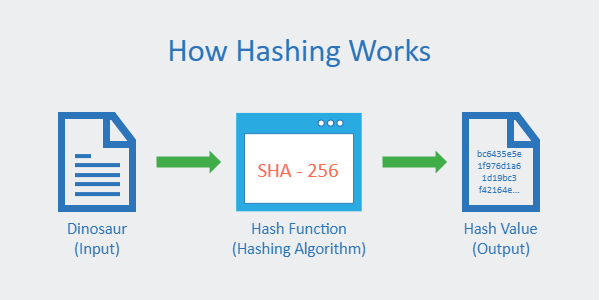

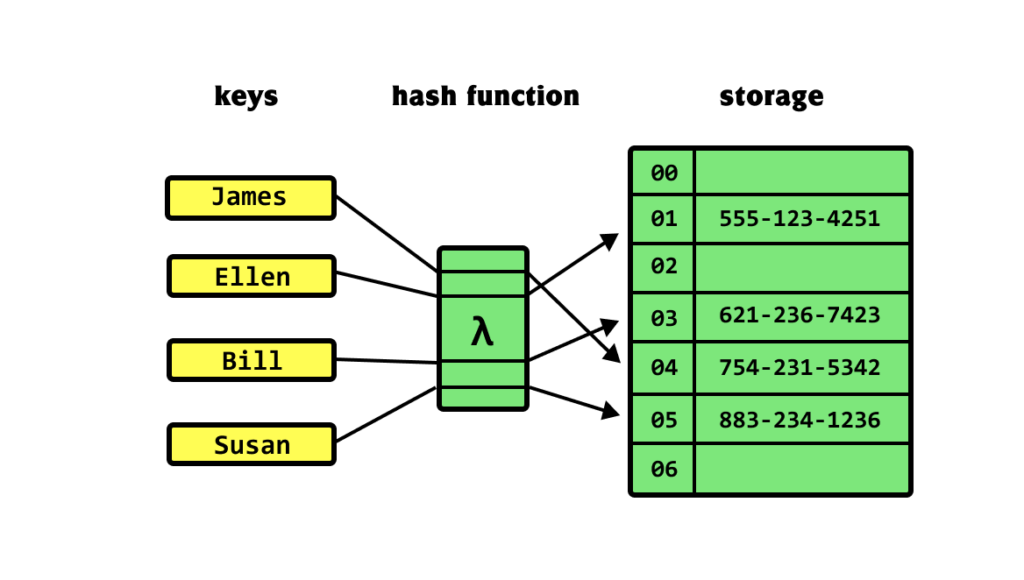

Think of a hash function as a code generator. It takes any input, such as a word or a sentence, and turns it into a string of characters with a fixed length, usually shorter than the input. The same input always produces the same code, and with a good hash function two different inputs very rarely produce the same one.

These codes, called hash values, are crucial in caching because they act like addresses where specific data is stored. This makes it easier and faster to locate that data.
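The idea of a hash value acting as an address can be sketched in a few lines. This is a minimal illustration, not a description of how any particular cache is built: the helper names `hash_value` and `pick_slot` are made up for this example, and SHA-256 is simply one convenient choice from Python's `hashlib`.

```python
import hashlib


def hash_value(key: str) -> str:
    """Return a fixed-length hex digest for any input string."""
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def pick_slot(key: str, slot_count: int) -> int:
    """Map a key to one of slot_count storage slots using its hash value."""
    return int(hash_value(key), 16) % slot_count


short = hash_value("cat")
long = hash_value("a much longer sentence about caching")
slot = pick_slot("user:12345", 4)

# Both digests have the same length, whatever the input size.
print(len(short), len(long))
```

Because the same key always maps to the same slot, a lookup can go straight to the right place instead of scanning everything. A distributed cache can apply the same idea to decide which node holds a key.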

Using Redis for Caching with Hash Functions

Redis is an in-memory data store known for its speed, making it an ideal tool for caching. Let's consider an example of caching user data in Redis.

- Creating a Unique Hash Key: Suppose we want to cache data for a user with an ID. We’ll use a hash function to create a unique key for storing this user’s data.

      import hashlib

      # Example user data
      user_id = "12345"
      user_data = {
          "name": "John Doe",
          "email": "john.doe@example.com",
          "age": 30,
      }

      # Generate a hash key for the user data.
      # MD5 is used here only to derive a cache key, not for security.
      hash_key = hashlib.md5(user_id.encode()).hexdigest()

- Storing Data in Redis: With the key generated, we use the Redis library in Python to store this user data.

      import redis

      # Connect to Redis; decode_responses=True returns str instead of bytes
      redis_client = redis.Redis(host='localhost', port=6379, db=0, decode_responses=True)

      # Save the user data in a Redis hash (hset with mapping replaces the deprecated hmset)
      redis_client.hset(hash_key, mapping=user_data)

- Retrieving Data from Redis: When you need the data, use the same hash key to fetch it from Redis.

      # Fetch user data from Redis
      retrieved_data = redis_client.hgetall(hash_key)

      # Print the retrieved data (Redis returns every field value as a string)
      print(retrieved_data)

This example shows how you can generate a key with a hash function and store data under that key in Redis, allowing for quick retrieval.

Full-Text Search in MySQL

Full-text search in MySQL is a powerful way to search text data. It gives you an efficient way to find words or phrases in documents, articles, or product descriptions. Unlike a basic pattern match with LIKE, full-text search uses a dedicated index and can rank results by relevance, which makes the results more useful.

How to Set Up Full-Text Search in MySQL

- Create a Table with Full-Text Index: Let’s say you have a table called `products` with a column for product descriptions. You can set up a full-text index on this column to enable faster searching.

      CREATE TABLE products (
          id INT AUTO_INCREMENT PRIMARY KEY,
          name VARCHAR(255),
          description TEXT,
          FULLTEXT(description) -- Adds a full-text index on the description column
      );

- Insert Some Data: Add some sample product descriptions.

      INSERT INTO products (name, description) VALUES
          ('Laptop', 'A high-performance laptop with 16GB RAM and 512GB SSD storage.'),
          ('Smartphone', 'A new smartphone with a large display, excellent camera, and long-lasting battery.'),
          ('Headphones', 'Noise-canceling wireless headphones with superior sound quality.');

- Search Using Full-Text Search: To search for products related to "laptop," use the `MATCH ... AGAINST` clause.

      SELECT name, description
      FROM products
      WHERE MATCH(description) AGAINST('laptop');

This query will return all products where the description contains the word "laptop."

Combining Redis Caching with MySQL Full-Text Search

To make your searches even faster, you can cache frequently requested search results in Redis. Here’s how this works:

- Step 1: When a search is made, first check Redis to see if the result is already cached.

- Step 2: If found in Redis (cache hit), return the result immediately.

- Step 3: If not found (cache miss), perform a full-text search in MySQL.

- Step 4: Save the result in Redis with a set expiration time.

- Step 5: Return the result to the user.
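The five steps above can be sketched as follows. To keep the example runnable without a Redis or MySQL server, a small in-process `TTLCache` class stands in for Redis and a `fake_search` function stands in for the MySQL full-text query. Both are hypothetical helpers written for this illustration, as are the `search:` key prefix and the 300-second expiration.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple


class TTLCache:
    """In-process stand-in for Redis: stores values with an expiry time."""

    def __init__(self) -> None:
        self._items: Dict[str, Tuple[float, List[str]]] = {}

    def get(self, key: str) -> Optional[List[str]]:
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            del self._items[key]  # expired: treat as a miss
            return None
        return value

    def set(self, key: str, value: List[str], ttl_seconds: float) -> None:
        self._items[key] = (time.monotonic() + ttl_seconds, value)


def cached_search(
    term: str,
    cache: TTLCache,
    search_db: Callable[[str], List[str]],
    ttl_seconds: float = 300.0,
) -> List[str]:
    key = f"search:{term.lower()}"
    cached = cache.get(key)              # Step 1: check the cache
    if cached is not None:
        return cached                    # Step 2: cache hit
    result = search_db(term)             # Step 3: cache miss, query the database
    cache.set(key, result, ttl_seconds)  # Step 4: store with an expiration time
    return result                        # Step 5: return to the caller


db_calls: List[str] = []


def fake_search(term: str) -> List[str]:
    """Stand-in for the MySQL MATCH ... AGAINST query."""
    db_calls.append(term)
    return ["Laptop"] if term.lower() == "laptop" else []


cache = TTLCache()
first = cached_search("laptop", cache, fake_search)
second = cached_search("laptop", cache, fake_search)
print(first, second, len(db_calls))
```

Only the first search reaches the database; the second is answered from the cache until the entry expires. Empty result lists are cached too, so repeated searches with no matches do not keep hitting the database.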

How to Implement Caching in Your System

- Choose the Right Cache Type:

- Decide whether in-memory, disk, or distributed caching is best for your needs. For quick data access, go with in-memory caching. For larger data, consider disk or distributed caching.

- Configure Cache Settings:

- Set parameters like expiration times (how long data should be cached), cache size (how much data can be stored), and eviction policies (rules for removing old data).

- Integrate with Existing Systems:

- Implement caching within your applications and databases. For example, integrate Redis with your web app to cache session data, or configure browser caching for static assets.
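To make the cache size and eviction policy settings more concrete, here is a minimal sketch of a least-recently-used (LRU) policy, one common eviction rule. The `LRUCache` class and its `max_entries` parameter are hypothetical names for this example; expiration times are left out to keep it short.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional


class LRUCache:
    """Fixed-size cache that evicts the least recently used entry when full."""

    def __init__(self, max_entries: int) -> None:
        if max_entries < 1:
            raise ValueError("max_entries must be at least 1")
        self.max_entries = max_entries
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.max_entries:
            self._items.popitem(last=False)  # evict the least recently used entry


cache = LRUCache(max_entries=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now more recent than "b"
cache.put("c", 3)  # the cache is full, so "b" is evicted
```

Production caches expose the same choices as configuration rather than code, so the work is mostly in picking a size and policy that match your access patterns.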

Common Caching Challenges and Solutions

- Cache Invalidation:

- Challenge: Keeping cached data updated can be tricky. If the underlying data changes, you need to ensure the cache reflects those changes.

- Solution: Implement strategies like time-based expiration or explicit cache invalidation to refresh stale data.

- Cache Consistency:

- Challenge: Ensuring that data remains consistent across different caches, especially in distributed environments.

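- Solution: Use consistency protocols and synchronization techniques to maintain data accuracy, for example updating the cache as part of every write (write-through) or sending an invalidation message to every cache node when the data changes.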

- Cache Management:

- Challenge: Managing cache size and performance can be complex, particularly as data volume grows.

- Solution: Regularly monitor cache performance, adjust settings as needed, and consider using automated tools for cache management.
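Explicit cache invalidation, mentioned under Cache Invalidation above, can be sketched as "update the source, then drop the cached copy". In this illustration two plain dictionaries stand in for the database and the cache, and the `read` and `write` helpers are hypothetical names, so treat it as an outline of the idea rather than a production pattern.

```python
from typing import Dict, Optional

database: Dict[str, str] = {"product:1": "Laptop"}  # stand-in for the source of truth
cache: Dict[str, str] = {}                           # stand-in for the cache


def read(key: str) -> Optional[str]:
    """Read through the cache, filling it on a miss."""
    if key in cache:
        return cache[key]
    value = database.get(key)
    if value is not None:
        cache[key] = value
    return value


def write(key: str, value: str) -> None:
    """Update the source first, then drop the cached copy so it cannot go stale."""
    database[key] = value
    cache.pop(key, None)


before = read("product:1")            # fills the cache with "Laptop"
write("product:1", "Gaming Laptop")   # explicit invalidation
after = read("product:1")             # miss, reloaded from the database
print(before, after)
```

Time-based expiration can be combined with this approach as a safety net, so that an entry missed by an invalidation still disappears after a bounded time.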

Case Studies: How Caching Improved System Performance

- E-Commerce Website Boost:

- An e-commerce site implemented Redis for in-memory caching of product data. The result? A significant reduction in page load times and a 20% increase in customer satisfaction.

- Media Streaming Service Enhancement:

- A media streaming service used disk caching for storing frequently accessed video content. This strategy cut down streaming buffer times by 50% and improved user experience.

Future Trends in Caching

- AI and Machine Learning Integration:

- The future of caching will likely involve AI and machine learning to predict data access patterns and optimize cache performance dynamically.

- Advanced Caching Techniques:

- Innovations in caching, such as adaptive caching and intelligent prefetching, will continue to evolve, offering more efficient and automated solutions.

- Enhanced Security:

- With increasing concerns about data privacy, future caching solutions will focus on incorporating advanced security measures to protect cached data.

Caching is a powerful technique that can dramatically improve your system’s performance by storing frequently accessed data for faster retrieval. By choosing the right caching strategy and addressing common challenges, you can enhance your system’s efficiency and reliability. Explore different caching methods and integrate them into your systems to reap the benefits. Feel free to share your experiences or ask questions about caching in the comments!